“Nvidia is an AI infrastructure company, not just ‘buy chips, sell chips’”

- Jensen Huang

The rapid acceleration of Artificial Intelligence (AI) and Generative AI (GenAI) adoption is placing unprecedented demands on enterprise infrastructure, spanning compute, storage, and network capacity.

As organizations scale AI initiatives from pilot to production, it is becoming clear that conventional Information Technology (IT) architectures struggle to meet the performance and scalability needs of AI workloads. To keep pace with the growing intensity of training and inference, enterprises are turning to purpose-built, high-performance infrastructure as a critical enabler of AI deployment and operational integration.

This intensifying focus on AI infrastructure has created a new strategic core in the tech ecosystem, and no company is positioning itself more aggressively at the heart of it than Nvidia.

What began as a Graphics Processing Unit (GPU) company now aims to become the full-stack foundation for AI, touching every layer of the AI value chain. When Jensen Huang took the stage at GTC 2025, it wasn’t just about new chips – it was a bold vision of Nvidia becoming the operating system of the AI economy.

This blog unpacks Nvidia’s full-stack strategy, its implications for the ecosystem, and the strategic questions now facing vendors, service providers, and enterprises.


Inside Nvidia’s playbook: Signals, stakes, and shifting ground

Nvidia aims to dominate the AI stack – but in a fast-changing ecosystem, can it keep the crown?

At GTC 2025, Nvidia advanced its full-stack AI strategy with new offerings in compute (Blackwell Ultra), networking (Spectrum-X, Quantum-X), and software (Dynamo), aiming to cover all stages of AI development. While Nvidia’s end-to-end AI stack strengthens its platform play, the broader ecosystem is evolving rapidly, with growing alternatives from hyperscalers and agile startups. As concerns around vendor lock-in grow, Nvidia’s vertically integrated model may face resistance from enterprises seeking greater interoperability and cost flexibility. In short, Nvidia’s end-to-end AI stack could be its biggest strength or, if not carefully managed, its most exposed point of competition.

Nvidia sets the stage for physical AI – now comes the test of real-world readiness

Nvidia is expanding its physical AI ecosystem through its “three computers” framework, offering dedicated tools for each stage: the GR00T N1 foundation model for robotics, Omniverse Mega for simulation, and DRIVE AGX for in-vehicle AI deployment. It has also introduced two new blueprints, built on Omniverse and Cosmos, to give developers the platforms for refining robots and autonomous systems.

Through these announcements, Nvidia aims to lower entry barriers and embed itself across the physical AI pipeline, positioning itself as an essential provider. But ecosystem readiness remains uncertain, and success depends not just on technology, but on alignment, standardization, and industry trust.

Nvidia targets both individual and enterprise AI markets – but how will personal hardware measure up to cloud-based alternatives?

Nvidia unveiled its DGX Spark and DGX Station personal AI systems, powered by Grace Blackwell superchips (with the Blackwell Ultra-based GB300 in DGX Station) and designed to support developers, researchers, and data scientists working with advanced AI workloads. The launch underscores Nvidia’s two-pronged approach: catering to individual innovators while maintaining its stronghold in enterprise-scale AI infrastructure. However, with cloud-based alternatives offering flexible GPU access and inference-as-a-service, a key question emerges – will developers and smaller teams prefer the scalability and potential cost advantages of the cloud over investing in high-end local hardware?

As sustainability becomes a priority, will liquid cooling play a key role in the future of data centers?

At GTC 2025, Nvidia highlighted a key trend in the future of data centers: the growing shift toward liquid cooling. As AI models scale and GPU power demands increase, traditional air-cooled systems are reaching their thermal and efficiency limits. Nvidia’s next-generation hardware, including Blackwell-based systems, reflects this shift, with designs increasingly optimized for liquid-cooled data centers. The transition points to a broader industry move – where cooling is no longer just a facility concern but a core part of AI hardware architecture.

As AI workloads scale, AI factories are gaining ground – could they redefine what we think of as the modern data center?

Nvidia’s concept of AI factories signals a shift from traditional data centers to infrastructure purpose-built for continuous AI output. While a traditional data center typically handles diverse workloads and is built for general-purpose computing, AI factories orchestrate the entire AI lifecycle, from data ingestion to training, fine-tuning, and, most critically, high-volume inference.

Nvidia’s vision of AI factories marks a significant evolution in computing infrastructure, pointing to a future where traditional data centers may no longer suffice. This raises the question: could AI factories soon replace traditional data centers as the central hubs of digital progress in an AI-driven world?

Nvidia’s end-to-end play: Gains for one, decisions for many

As Nvidia pushes deeper into the AI value chain, the implications ripple across the ecosystem – from Original Equipment Manufacturers (OEMs) and silicon vendors to cloud providers, service partners, and ultimately, enterprise buyers. The stack may belong to Nvidia, but the strategic decisions now rest with everyone else. By shifting from selling chips to managing the full AI stack, Nvidia aims to position itself as a platform gatekeeper, seeking strategic advantages such as greater innovation control, pricing leverage, and ecosystem integration.

As Nvidia extends its influence, ecosystem players must balance new opportunities with growing challenges around differentiation, flexibility, and strategic dependence. The key question is how the rest of the ecosystem will respond: where to align, where to carve out value, and where to push back. The next section explores what this means for tech vendors and service providers navigating the Nvidia era.

Supply-side ecosystem in the Nvidia era

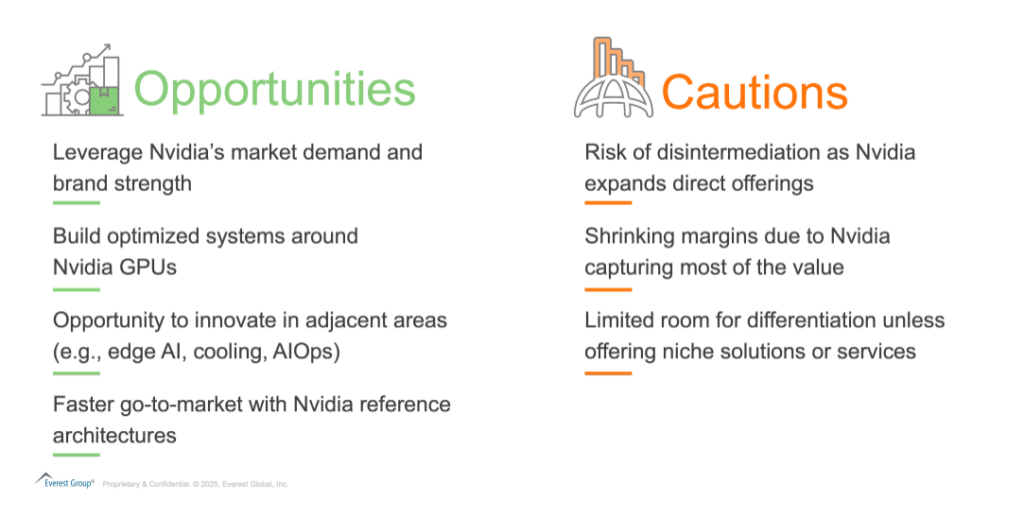

For tech vendors and OEMs:

For tech vendors and OEMs, Nvidia’s platform offers both opportunity and threat. They can build domain-specific offerings, systems, and solutions atop Nvidia’s reference designs. But differentiation is difficult, and margin pressure is growing as Nvidia captures more value higher up the stack.

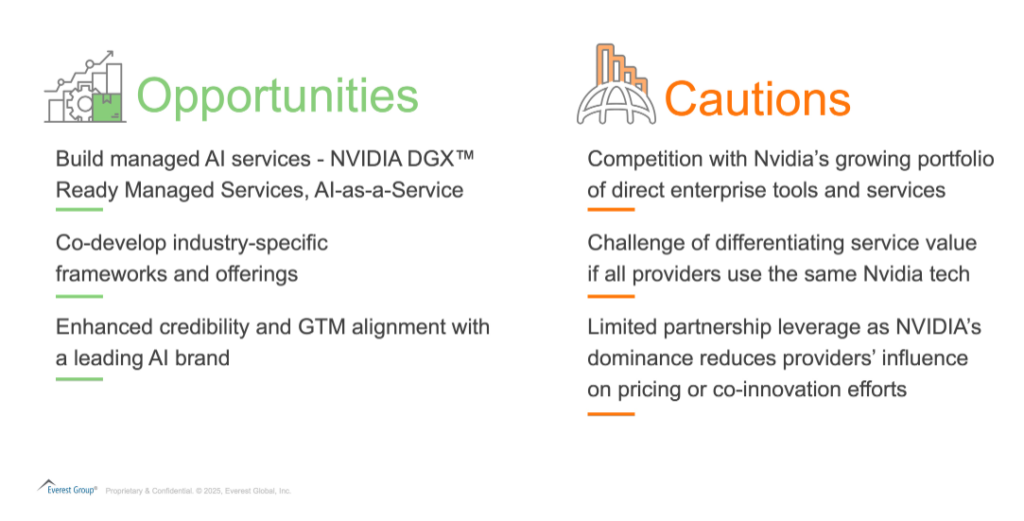

For service providers:

For service providers, the opportunity lies in co-innovation – building enterprise AI, industrial digital twins, and robotics systems using Nvidia’s tools. But relying too heavily on Nvidia risks architectural inflexibility and strategic dependence.

To thrive, players in the ecosystem must be deliberate. They can align with Nvidia while retaining the ability to pivot, add value beyond what Nvidia offers, and explore alternative AI platforms where it makes sense.

Way forward

Nvidia’s AI empire is rapidly consolidating. It now touches every layer of the AI value chain. This moment marks a real inflection point – not just for Nvidia, but for the entire AI ecosystem. As the stack consolidates and infrastructure becomes increasingly platform-defined, every player in the value chain is being forced to reassess its role.

Tech vendors must decide where they add value in a vertically integrated world. Service providers must evolve from AI enablers to infrastructure orchestrators and strategic partners. Enterprises, the ultimate customers, must grapple with new forms of dependency, complexity, and control as they scale AI from pilots to production. And the industry must confront the trade-offs between performance, openness, and strategic flexibility. The question is no longer how to compete with Nvidia, but how to succeed within an ecosystem it defines.

That reframing leads to deeper strategic considerations for the supply-side ecosystem:

- Can you add differentiated value atop Nvidia’s stack?

- How will you maintain pricing power if Nvidia controls the base layer?

- Is a full-stack AI orchestrator the next logical step?

As Nvidia expands from chipmaker to AI operating system, tech providers and service providers alike must rethink their positioning. The AI economy is increasingly Nvidia-shaped. Survival and success depend on navigating that terrain with clarity and agility.

If you found this blog interesting, check out our latest Redefining the IT Technology Stack to Enable AI-ready Enterprises report.

If you have questions or want to discuss your AI adoption strategy further, please contact Tanvi Rai or Deepti Sekhri.